Eye movement in music reading

Eye movement in music reading is the scanning of a musical score by a musician's eyes. This usually occurs as the music is read during performance, although musicians sometimes scan music silently to study it. The phenomenon has been studied by researchers from a range of backgrounds, including cognitive psychology and music education. These studies have typically reflected a curiosity among performing musicians about a central process in their craft, and a hope that investigating eye movement might help in the development of more effective methods of training musicians' sight reading skills.

A central aspect of music reading is the sequence of alternating saccades and fixations, as it is for most oculomotor tasks. Saccades are the rapid ‘flicks’ that move the eyes from location to location over a music score. Saccades are separated from each other by fixations, during which the eyes are relatively stationary on the page. It is well established that the perception of visual information occurs almost entirely during fixations and that little if any information is picked up during saccades.[1] Fixations comprise about 90% of music reading time, typically averaging 250–400 ms in duration.[2]

Eye movement in music reading is an extremely complex phenomenon that involves a number of unresolved issues in psychology, and which requires intricate experimental conditions to produce meaningful data. Despite some 30 studies in this area over the past 70 years, little is known about the underlying patterns of eye movement in music reading.

Relationship with eye movement in language reading

Eye movement in music reading may at first appear to be similar to that in language reading, since in both activities the eyes move over the page in fixations and saccades, picking up and processing coded meanings. However, it is here that the obvious similarities end. Not only is the coding system of music nonlinguistic; it involves what is apparently a unique combination of features among human activities: a strict and continuous time constraint on an output that is generated by a continuous stream of coded instructions. Even the reading of language aloud, which, like musical performance, involves turning coded information into a musculoskeletal response, is relatively free of temporal constraint: the pulse in reading aloud is a fluid, improvised affair compared with its rigid presence in most Western music. It is this uniquely strict temporal requirement in musical performance that has made the observation of eye movement in music reading fraught with more difficulty than that in language reading.

Another critical difference between reading music and reading language is the role of skill. Most people become reasonably efficient at language reading by adulthood, even though almost all language reading is sight reading.[3] By contrast, some musicians regard themselves as poor sight readers of music even after years of study. Thus, the improvement of music sight reading and the differences between skilled and unskilled readers have always been of prime importance to research into eye movement in music reading, whereas research into eye movement in language reading has been more concerned with the development of a unified psychological model of the reading process.[4] It is therefore unsurprising that most research into eye movement in music reading has aimed to compare the eye movement patterns of the skilled and the unskilled.

Equipment and related methodology

From the start, there were basic problems with eye-tracking equipment. The five earliest studies[5] used photographic techniques. These methods involved either training a continuous beam of visible light onto the eye to produce an unbroken line on photographic paper, or a flashing light to produce a series of white spots on photographic paper at sampling intervals around 25 ms (i.e., 40 samples a second). Because the film rolled through the device vertically, the vertical movement of the eyes in their journey across the page was either unrecorded[6] or was recorded using a second camera and subsequently combined to provide data on both dimensions, a cumbersome and inaccurate solution.

These systems were sensitive to even small movements of the head or body, which appear to have significantly contaminated the data. Some studies used devices such as a headrest and bite-plate to minimise this contamination, with limited success; one used a camera affixed to a motorcycle helmet weighing nearly 3 kg, which was supported by a system of counterbalancing weights and pulleys attached to the ceiling.[7] In addition to extraneous head movement, researchers faced other physical, bodily problems. The musculoskeletal response required to play a musical instrument involves substantial body movement, usually of the hands, arms and torso. This can upset the delicate balance of tracking equipment and confound the registration of data. Another issue that affects almost all unskilled keyboardists and a considerable proportion of skilled keyboardists is the common tendency to glance down frequently at the hands and back to the score during performance. The disadvantage of this behaviour is that it causes signal dropout in the data every time it occurs, which is sometimes up to several times per bar.[8] When participants are prevented from looking down at their hands, the quality of their performance is typically degraded. Rayner & Pollatsek (1997:49) wrote that:

- "even skilled musicians naturally look at their hands at times. ... [Because] accurate eye movement recording [is generally incompatible with] these head movements ... musicians often need appreciable training with the apparatus before their eye movements can be measured."

Since Lang (1961), all reported studies into eye movement in music reading, aside from Smith (1988), appear to have used infrared tracking technology. However, research into the field has mostly been conducted using less than optimal equipment. This has had a pervasive negative impact on almost all research up until a few recent studies. In summary, the four main equipment problems have been that tracking devices:

- measured eye movement inaccurately or provided insufficient data;

- were uncomfortable for participants and therefore risked a reduction in ecological validity;

- did not allow for the display of records of eye movement in relation to the musical score, or at least made such a display difficult to achieve; and

- were adversely affected by most participants' tendency to look down at their hands, to move their bodies significantly during performance, and to blink.

Not until recently has eye movement in music reading been investigated with more satisfactory equipment. Kinsler and Carpenter (1995) were able to identify eye position to within 0.25°, that is, the size of the individual musical notes, at intervals of 1 ms. Truitt et al. (1997) used a similarly accurate infrared system capable of displaying a movement window and integrated into a computer-monitored musical keyboard. Waters & Underwood (1998) used a machine with an accuracy of plus or minus one character space and a sampling interval of only 4 ms.

Tempo and data contamination

Most research into eye movement in music reading has primarily aimed to compare the eye movement patterns of skilled and unskilled performers.[9] The implicit presumption appears to have been that this might lay the foundation for developing better ways of training musicians. However, there are significant methodological problems in attempting this comparison. Skilled and unskilled performers typically sight read the same passage at different tempos and/or levels of accuracy. At a sufficiently slow tempo, players over a large range of skill-levels are capable of accurate performance, but the skilled will have excess capacity in their perception and processing of the information on the page. There is evidence that excess capacity contaminates eye-movement data with a ‘wandering’ effect, in which the eyes tend to stray from the course of the music. Weaver (1943:15) implied the existence of the wandering effect and its confounding influence, as did Truitt et al. (1997:51), who suspected that at slow tempo their participants' eyes were "hanging around rather than extracting information". The wandering effect is undesirable, because it is an unquantifiable and possibly random distortion of normal eye movement patterns.

Souter (2001:81) claimed that the ideal tempo for observing eye movement is a range lying between one that is so fast as to produce a significant level of action slips, and one that is so slow as to produce a significant wandering effect. The skilled and the unskilled have quite different ranges for sight reading the same music. On the other hand, a faster tempo may minimise excess capacity in the skilled, but will tend to induce inaccurate performance in the unskilled; inaccuracies rob us of the only evidence that a performer has processed the information on the page, and the danger cannot be discounted that feedback from action-slips contaminates eye movement data.

Almost all studies have compared temporal variables among participants, chiefly the durations of their fixations and saccades. In these cases, it is self-evident that useful comparisons require consistency in performance tempo and accuracy within and between performances. However, most studies have accommodated their participants’ varied performance ability in the reading of the same stimulus, by allowing them to choose their own tempo or by not strictly controlling that tempo. Theoretically, there is a relatively narrow range, referred to here as the ‘optimal range’, in which capacity matches the task at hand; on either side of this range lie the two problematic tempo ranges within which a performer’s capacity is excessive or insufficient, respectively. The location of the boundaries of the optimal range depends on the skill-level of an individual performer and the relative difficulty of reading/performing the stimulus.[10]

Thus, unless participants are drawn from a narrow range of skill-levels, their optimal ranges will be mutually exclusive, and observations at a single, controlled tempo will be likely to result in significant contamination of eye movement data. Most studies have sought to compare the skilled and the unskilled in the hope of generating pedagogically useful data; aside from Smith (1988), in which tempo itself was an independent variable, Polanka (1995), who analysed only data from silent preparatory readings, and Souter (2001), who observed only the highly skilled, none has set out to control tempo strictly. Investigators have apparently attempted to overcome the consequences of the fallacy by making compromises, such as (1) exercising little or no control over the tempos at which participants performed in trials, and/or (2) tolerating significant disparity in the level of action slips between skilled and unskilled groups.

This issue is part of the broader tempo/skill/action-slip fallacy, which concerns the relationship between tempo, skill and the level of action slips (performance errors).[11] The fallacy is that it is possible to reliably compare the eye movement patterns of skilled and unskilled performers under the same conditions.

Musical complexity

Many researchers have been interested in learning whether fixation durations are influenced by the complexity of the music. At least three types of complexity need to be accounted for in music reading: the visual complexity of the musical notation; the complexity of processing visual input into musculoskeletal commands; and the complexity of executing those commands. For example, visual complexity might be in the form of the density of the notational symbols on the page, or of the presence of accidentals, triplet signs, slurs and other expression markings. The complexity of processing visual input into musculoskeletal commands might involve a lack of 'chunkability' or predictability in the music. The complexity of executing musculoskeletal commands might be seen in terms of the demands of fingering and hand position. It is in isolating and accounting for the interplay between these types that the difficulty lies in making sense of musical complexity. For this reason, little useful information has emerged from investigating the relationship between musical complexity and eye movement.

Jacobsen (1941:213) concluded that "the complexity of the reading material influenced the number and the duration of [fixations]"; where the texture, rhythm, key and accidentals were "more difficult", there was, on average, a slowing of tempo and an increase in both the duration and the number of fixations in his participants. However, performance tempos were uncontrolled in this study, so the data on which this conclusion was based are likely to have been contaminated by the slower tempos that were reported for the reading of the more difficult stimuli.[12] Weaver (1943) claimed that fixation durations, which ranged from 270 to 530 ms, lengthened when the notation was more compact and/or complex, as Jacobsen had found, but did not disclose whether slower tempos were used. Halverson (1974), who controlled tempo more closely, observed a mild opposite effect. Schmidt's (1981) participants used longer fixation durations in reading easier melodies (consistent with Halverson); Goolsby's (1987) data mildly supported Halverson's finding, but only for skilled readers. Goolsby wrote that "both Jacobsen and Weaver ... in letting participants select their own tempo found the opposite effect of notational complexity".[13]

On balance, it appears likely that under controlled temporal conditions, denser and more complex music is associated with a higher number of fixations, of shorter mean duration. This might be explained as an attempt by the music-reading process to provide more frequent 'refreshment' of the material being held in working memory, and may compensate for the need to hold more information in working memory.[14]

Reader skill

Here, there is no disagreement among the major studies, from Jacobsen (1941) to Smith (1988): skilled readers appear to use more and shorter fixations across all conditions than do the unskilled. Goolsby (1987) found that mean 'progressive' (forward-moving) fixation duration was significantly longer (474 versus 377 ms) and mean saccade length significantly greater for the less skilled. Although Goolsby did not report the total reading durations of his trials, they can be derived from the mean tempos of his 12 skilled and 12 unskilled participants for each of the four stimuli.[15] His data appear to show that the unskilled played at 93.6% of the tempo of the skilled, and that their mean fixation durations were 25.6% longer.

This raises the question as to why skilled readers should distribute more numerous and shorter fixations over a score than the unskilled. Only one plausible explanation appears in the literature. Kinsler & Carpenter (1995) proposed a model for the processing of music notation, based on their data from the reading of rhythm patterns, in which an iconic representation of each fixated image is scanned by a 'processor' and interpreted to a given level of accuracy. The scan ends when this level cannot be reached, its end-point determining the position of the upcoming fixation. The time taken before this decision depends on the complexity of a note, and is presumably shorter for skilled readers, thus promoting more numerous fixations of shorter duration. This model has not been further investigated, and does not explain what advantage there is to using short, numerous fixations. Another possible explanation is that skilled readers maintain a larger eye–hand span and therefore hold a larger amount of information in their working memory; thus, they need to refresh that information more frequently from the music score, and may do so by refixating more frequently.[16]

Stimulus familiarity

The more familiar readers become with a musical excerpt, the less their reliance on visual input from the score and the correspondingly greater reliance on their stored memory of the music. On logical grounds, it would be expected that this shift would result in fewer and longer fixations. The data from all three studies into eye movement in the reading of increasingly familiar music support this reasoning. York's (1952) participants read each stimulus twice, with each reading preceded by a 28-second silent preview. On average, both skilled and unskilled readers used fewer and longer fixations during the second reading. Goolsby's (1987) participants were observed during three immediately successive readings of the same musical stimulus. Familiarity in these trials appeared to increase fixation duration, but not nearly as much as might have been expected. The second reading produced no significant difference in mean fixation duration (from 422 to 418 ms). On the third encounter, mean fixation duration was higher for both groups (437 ms) but by a barely significant amount, thus mildly supporting York's earlier finding. The smallness of these changes might be explained by the unchallenging reading conditions in the trials. The tempo of MM120 suggested at the start of each of Goolsby's trials appears to be slow for tackling the given melodies, which contained many semibreves and minims, and there may have simply been insufficient pressure to produce significant results. A more likely explanation is that the participants played the stimuli at faster tempos as they grew more familiar with them through the three readings. (The metronome was initially sounded, but was silent during the performances, allowing readers to vary their pace at will.) 
Thus, it is possible that two influences were at odds with each other: growing familiarity may have promoted low numbers of fixations, and long fixation durations, while faster tempo may have promoted low numbers and short durations. This might explain why mean fixation duration fell in the opposite direction to the prediction for the second encounter, and by the third encounter had risen by only 3.55% across both groups.[17] (Smith's (1988) results, reinforced by those of Kinsler & Carpenter (1995), suggest that faster tempos are likely to reduce both the number and duration of fixations in the reading of a single-line melody. If this hypothesis is correct, it may be connected with the possibility that the more familiar a stimulus, the less the workload on the reader's memory.)

Top–down/bottom–up question

There was considerable debate from the 1950s to 1970s as to whether eye movement in language reading is solely or mainly influenced by (1) the pre-existing (top–down) behavioural patterns of an individual's reading technique, (2) the nature of the stimulus (bottom–up), or (3) both factors. Rayner et al. (1971) provides a review of the relevant studies.

Decades before this debate, Weaver (1943) had set out to determine the (bottom–up) effects of musical texture on eye movement. He hypothesised that vertical compositional patterns in a two-stave keyboard score would promote vertical saccades, and horizontal compositional patterns horizontal saccades. Weaver's participants read a two-part polyphonic stimulus in which the musical patterns were strongly horizontal, and a four-part homophonic stimulus comprising plain, hymn-like chords, in which the compositional patterns were strongly vertical. Weaver was apparently unaware of the difficulty of proving this hypothesis in the light of the continual need to scan up and down between the staves and move forward along the score. Thus, it is unsurprising that the hypothesis was not confirmed.

Four decades later, when evidence was being revealed of the bottom–up influence on eye movement in language reading, Sloboda (1985) was interested in the possibility that there might be an equivalent influence on eye movement in music reading, and appeared to assume that Weaver's hypothesis had been confirmed. "Weaver found that [the vertical] pattern was indeed used when the music was homophonic and chordal in nature. When the music was contrapuntal, however, he found fixation sequences which were grouped in horizontal sweeps along a single line, with a return to another line afterwards."[18] To support this assertion, Sloboda quoted two one-bar fragments taken from Weaver's illustrations that do not appear to be representative of the overall examples.[19]

Although Sloboda's claim may be questionable, and despite Weaver's failure to find dimensional links between eye movement and stimulus, eye movement in music reading shows clear evidence in most studies (in particular, Truitt et al. (1997) and Goolsby (1987)) of the influence of bottom–up graphical features and top–down global factors related to the meaning of the symbols.

Peripheral visual input

The role of peripheral visual input in language reading remains the subject of much research. Peripheral input in music reading was a particular focus of Truitt et al. (1997). They used the gaze-contingency paradigm to measure the extent of peripheral perception to the right of a fixation. This paradigm, also known as the "moving window technique", involves the spontaneous manipulation of a display in direct response to where the eyes are gazing at any one point of time. Performance was degraded only slightly when four crotchets to the right were presented as the ongoing preview, but significantly when only two crotchets were presented. Under these conditions, peripheral input extended over a little more than a four-beat measure, on average. For the less skilled, useful peripheral perception extended from half a beat up to between two and four beats. For the more skilled, useful peripheral perception extended up to five beats.
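The moving-window idea can be illustrated with a minimal sketch. It is not the display software used by Truitt et al.; the function name `visible_notes`, the representation of the score as a list of beat onsets, and the rule that everything to the left of the fixation stays visible are all assumptions made for the example.

```python
def visible_notes(note_onsets, fixation_beat, preview_beats):
    """Return the note onsets (in beats) that remain displayed.

    Hypothetical rule: notes up to `preview_beats` to the right of the
    fixated beat stay visible; notes further to the right are masked.
    """
    limit = fixation_beat + preview_beats
    return [onset for onset in note_onsets if onset <= limit]


# Invented stimulus: one crotchet per beat across two 4/4 bars (beats 0..7).
score = list(range(8))
print(visible_notes(score, fixation_beat=1, preview_beats=2))  # [0, 1, 2, 3]
print(visible_notes(score, fixation_beat=1, preview_beats=4))  # [0, 1, 2, 3, 4, 5]
```

In a real gaze-contingent display the window would be recomputed on every eye-tracker sample, so the masked region follows the eyes across the score.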

Peripheral visual input in music reading is clearly in need of more investigation, particularly now that the moving-window technique has become more accessible to researchers. A case could be made that Western music notation has developed in such a way as to optimise the use of peripheral input in the reading process. Noteheads, stems, beams, barlines and other notational symbols are all sufficiently bold and distinctive to be useful when picked up peripherally, even when at some distance from the fovea. The upcoming pitch contour and prevailing rhythmic values of a musical line can typically be ascertained ahead of foveal perception. For example, a run of continuous semiquavers beamed together by two thick, roughly horizontal beams will convey potentially valuable information about rhythm and texture, whether to the right on the currently fixated stave, or above or below in a neighbouring stave. This is reason enough to suspect that the peripheral preprocessing of notational information is a factor in fluent music reading, just as it has been found to be the case for language reading. This would be consistent with the findings of Smith (1988) and Kinsler & Carpenter (1995), who reported that the eyes do not fixate on every note in the reading of melodies.

Refixation

A refixation is a fixation on information that has already been fixated on during the same reading. In the reading of two-stave keyboard music, there are two forms of refixation: (1) up or down within a chord, after the chord has already been inspected on both staves (vertical refixation), and (2) leftward refixation to a previous chord (either back horizontally on the same stave or diagonally to the other stave). These are analogous to Pollatsek & Rayner’s two categories of refixation in the reading of language: (1) “same-word rightward refixation”, i.e., on different syllables in the same word, and (2) “leftward refixation” to previously read words (also known as “regression”).[20]
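The two forms of refixation in two-stave keyboard music can be expressed as a simple labelling rule. The sketch below is only one possible reading of the definitions above: the function name, the `(chord_index, stave)` data layout and the catch-all label `other` are assumptions, and the rightward recovery saccade that follows a leftward refixation is not labelled separately.

```python
def classify_refixations(fixations):
    """Label each fixation as 'vertical', 'leftward' or 'other'.

    `fixations` is a list of (chord_index, stave) pairs in temporal order,
    where stave is 'upper' or 'lower' (an assumed encoding).
    """
    seen = set()       # (chord, stave) locations already fixated
    labels = []
    previous = None
    for chord, stave in fixations:
        if previous is not None and chord < previous[0]:
            # Back to an earlier chord, on either stave.
            label = "leftward"
        elif (previous is not None and chord == previous[0]
              and stave != previous[1] and (chord, stave) in seen):
            # Vertical move within a chord already inspected on both staves.
            label = "vertical"
        else:
            label = "other"
        labels.append(label)
        seen.add((chord, stave))
        previous = (chord, stave)
    return labels


# Invented scanpath for illustration.
path = [(0, "upper"), (0, "lower"), (0, "upper"),
        (1, "upper"), (1, "lower"), (0, "lower"), (1, "lower")]
print(classify_refixations(path))
# ['other', 'other', 'vertical', 'other', 'other', 'leftward', 'other']
```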

Leftward refixation occurs in music reading at all skill-levels.[21] It involves a saccade back to the previous note/chord (occasionally even back two notes/chords), followed by at least one returning saccade to the right, to regain lost ground. Weaver reported that leftward regressions run from 7% to a substantial 23% of all saccades in the sight-reading of keyboard music. Goolsby and Smith reported significant levels of leftward refixation across all skill-levels in the sight-reading of melodies.[21]

Looking at the same information more than once is, prima facie, a costly behaviour that must be weighed against the need to keep pace with the tempo of the music. Leftward refixation involves a greater investment of time than vertical refixation, and on logical grounds is likely to be considerably less common. For the same reason, the rates of both forms of refixation are likely to be sensitive to tempo, with lower rates at faster speed to meet the demand for making swifter progress across the score. Souter confirmed both of these suppositions in the skilled sight-reading of keyboard music. He found that at slow tempo (one chord a second), 23.13% (SD 5.76%) of saccades were involved in vertical refixation compared with 5.05% (SD 4.81%) in leftward refixation (p < 0.001). At fast tempo (two chords a second), the rates were 8.15% (SD 4.41%) for vertical refixation compared with 2.41% (SD 2.37%) for leftward refixation (p = 0.011). These significant differences occurred even though recovery saccades were included in the counts for leftward refixations, effectively doubling their number. The reduction in the rate of vertical refixation upon the doubling of tempo was highly significant (p < 0.001), but that for leftward refixation was not (p = 0.209), possibly because of the low baseline.[22]

Eye–hand span

The eye–hand span (EHS) is the separation between eye position on the score and hand position. It can be measured in two ways: in notes (the number of notes between hand and eye; the 'note index'), or in time (the length of time between fixation and performance; the 'time index'). The main findings in relation to the eye–voice span in the reading aloud of language were that (1) a larger span is associated with faster, more skilled readers,[23] (2) a shorter span is associated with greater stimulus-difficulty,[24] and (3) the span appears to vary according to linguistic phrasing.[25] At least eight studies into eye movement in music reading have investigated analogous issues. For example, Jacobsen (1941) measured the average span to the right in the sight singing of melodies as up to two notes for the unskilled and between one and four notes for the skilled, whose faster average tempo in that study raises doubt as to whether skill alone was responsible for this difference. In Weaver (1943:28), the eye–hand span varied greatly, but never exceeded 'a separation of eight successive notes or chords', a figure that seems impossibly large for the reading of keyboard scores. Young (1971) found that both skilled and unskilled participants previewed about one chord ahead of their hands, an uncertain finding in view of the methodological problems in that study. Goolsby (1994) found that skilled sight singers' eyes were on average about four beats ahead of their voice, and less for the unskilled. He claimed that when sight singing, 'skilled music readers look farther ahead in the notation and then back to the point of performance' (p. 77). To put this another way, skilled music readers maintain a larger eye–hand span and are more likely to refixate within it. This association between span size and leftward refixation could arise from a greater need for the refreshment of information in working memory.
Furneaux & Land (1999) found that professional pianists' spans are significantly larger than those of amateurs. The time index was significantly affected by the performance tempo: when fast tempos were imposed on performance, all participants showed a reduction in the time index (to about 0.7 s), and slow tempos increased the time index (to about 1.3 s). This means that the length of time that information is stored in the buffer is related to performance tempo rather than ability, but that professionals can fit more information into their buffers.[26]
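The difference between the note index and the time index can be made concrete with a small sketch. The function names, the per-note timestamp lists and the sample values are all invented for illustration, and counting "notes fixated but not yet played" is only one possible way of operationalising the note index; the cited studies may have used different definitions.

```python
from bisect import bisect_right


def time_index(first_fixation_s, played_s):
    """Seconds between first fixating each note and playing it."""
    return [played - fixated
            for fixated, played in zip(first_fixation_s, played_s)]


def note_index(first_fixation_s, played_s, at_time_s):
    """Number of notes fixated but not yet played at a given moment.

    Both timestamp lists must be sorted in ascending order.
    """
    fixated = bisect_right(first_fixation_s, at_time_s)
    played = bisect_right(played_s, at_time_s)
    return fixated - played


# Invented data: four notes played once a second, each fixated 1 s earlier.
fixation_times = [0.0, 1.0, 2.0, 3.0]
onset_times = [1.0, 2.0, 3.0, 4.0]

print(time_index(fixation_times, onset_times))       # [1.0, 1.0, 1.0, 1.0]
print(note_index(fixation_times, onset_times, 2.5))  # 1
```

With a fixed time index, a faster tempo places more notes between eye and hand, which is one way the two measures can diverge.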

Sloboda (1974, 1977) cleverly applied Levin & Kaplan's (1970) 'light-out' method in an experiment designed to measure the size of the span in music reading. Sloboda (1977) asked his participants to sight read a melody and turned the lights out at an unpredictable point during each reading. The participants were instructed to continue playing correctly 'without guessing' for as long as they could after visual input was effectively removed, giving an indication as to how far ahead of their hands they were perceiving at that moment. Here, the span was defined as including peripheral input. Participants were allowed to choose their own performing speed for each piece, introducing a layer of uncertainty into the interpretation of the results. Sloboda reported that there was a tendency for the span to coincide with the musical phrasing, so that 'a boundary just beyond the average span "stretches" the span, and a boundary just before the average "contracts" it' (as reported in Sloboda 1985:72). Good readers, he found, maintain a larger span size (up to seven notes) than do poor readers (up to four notes).

Truitt et al. (1997) found that in sight reading melodies on the electronic keyboard, span size averaged a little over one beat and ranged from two beats behind the currently fixated point to an incredibly large 12 beats ahead. The normal range of span size was rather smaller: between one beat behind and three beats ahead of the hands for 88% of the total reading duration, and between 0 and 2 beats ahead for 68% of the duration. Such large ranges, in particular, those that extend leftwards from the point of fixation, may have been due to the 'wandering effect'. For the less skilled, the average span was about half a crotchet beat. For the skilled, the span averaged about two beats and useful peripheral perception extended up to five beats. This, in the view of Rayner & Pollatsek (1997:52), suggests that:

- "a major constraint on tasks that require translation of complex inputs into continuous motor transcription is [the limited capacity of] short-term memory. If the encoding process gets too far ahead of the output, there is likely to be a loss of material that is stored in the queue."

Rayner & Pollatsek (1997:52) explained the size of the eye–hand span as a continuous tug-of-war, as it were, between two forces: (1) the need for material to be held in working memory long enough to be processed into musculoskeletal commands, and (2) the need to limit the demand on span size and therefore the workload in the memory system. They claimed that most music pedagogy supports the first aspect [in advising] the student that the eyes should be well ahead of the hands for effective sight reading. They held that despite such advice, for most readers, the second aspect prevails; that is, the need to limit the workload of the memory system. This, they contended, results in a very small span under normal conditions.

Tempo

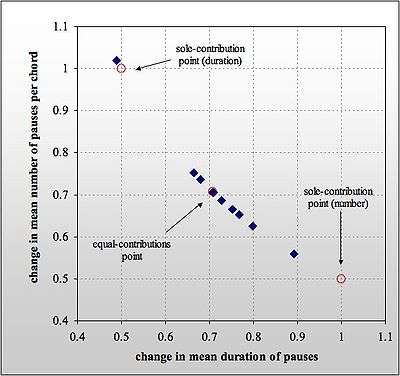

Smith (1988) found that when tempo is increased, fixations are fewer in number and shorter in mean duration, and that fixations tend to be spaced further apart on the score. Kinsler & Carpenter (1995) investigated the effect of increased tempo in reading rhythmic notation, rather than real melodies. They similarly found that increased tempo causes a decrease in mean fixation duration and an increase in mean saccade amplitude (i.e., the distance on the page between successive fixations). Souter (2001) used novel theory and methodology to investigate the effects of tempo on key variables in the sight reading of highly skilled keyboardists. Eye movement studies have typically measured saccade and fixation durations as separate variables. Souter (2001) used a novel variable: pause duration. This is a measure of the duration between the end of one fixation and the end of the next; that is, the sum of the duration of each saccade and of the fixation it leads to. Using this composite variable brings into play a simple relationship between the number of pauses, their mean duration, and the tempo: the number of pauses multiplied by their mean duration equals the total reading duration. In other words, the time taken to read a passage equals the sum of the durations of the individual pauses, or nd = r, where n is the number of pauses, d is their mean duration, and r is the total reading time. Since the total reading duration is inversely proportional to the tempo (double the tempo and the total reading time will be halved), the relationship can be expressed as nd is proportional to 1⁄t, where t is tempo.
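The identity nd = r follows directly from the definition of a pause, as the following minimal sketch shows. The function name and the saccade and fixation durations are invented for illustration and are not data from Souter (2001).

```python
def pause_durations(events):
    """Combine each saccade with the fixation it leads to.

    `events` is a list of (saccade_ms, fixation_ms) pairs.
    """
    return [saccade + fixation for saccade, fixation in events]


# Invented durations in milliseconds.
events = [(30, 270), (40, 360), (30, 320), (20, 430)]
pauses = pause_durations(events)

n = len(pauses)          # number of pauses
d = sum(pauses) / n      # mean pause duration
r = sum(pauses)          # total reading time

print(n, d, r)           # 4 375.0 1500
assert n * d == r        # nd = r holds by construction
```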

This study observed the effect of a change in tempo on the number and mean duration of pauses; thus, now using the letters to represent proportional changes in values,

nd = 1⁄t, where n is the proportional change in pause number, d is the proportional change in their mean duration, and t is the proportional change in tempo. This expression describes a number–duration curve, in which the number and mean duration of pauses form a hyperbolic relationship (since neither n nor d ever reaches zero). The curve represents the range of possible ratios for using these variables to adapt to a change in tempo. In Souter (2001), tempo was doubled from the first to the second reading, from 60 to 120 MM; thus, t = 2, and the number–duration curve is described by nd = 0.5 (Figure 2). In other words, the product of the proportional changes in the number and mean duration of pauses between these readings always equals ½. Each participant’s two readings thus corresponded to a point on this curve.
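As a rough illustration of the number–duration curve, the following Python sketch tabulates a few points on nd = 1⁄t for a doubling of tempo. The function name `duration_ratio` and the sampled values of n are assumptions made for this example.

```python
def duration_ratio(number_ratio: float, tempo_ratio: float) -> float:
    """Return d on the curve n * d = 1 / t for a given n (hypothetical helper)."""
    if number_ratio <= 0 or tempo_ratio <= 0:
        raise ValueError("ratios must be positive")
    return 1.0 / (tempo_ratio * number_ratio)


# Tempo doubled from 60 to 120 MM, as in the study described above.
tempo_ratio = 120 / 60

# Sample values of n chosen arbitrarily between the two extremes.
for n in (1.0, 0.9, 0.8, 0.7, 0.6, 0.5):
    d = duration_ratio(n, tempo_ratio)
    print(f"n = {n:.2f}  d = {d:.3f}  n*d = {n * d:.3f}")
```

Every row has the same product, 0.5, which is what places each pair of readings on the same curve.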

Irrespective of the value of t, all number–duration curves pass through three points of theoretical interest: two ‘sole-contribution’ points and one ‘equal-contribution’ point. At each sole-contribution point, a reader has relied entirely on one of the two variables to adapt to a new tempo. In Souter's study, if a participant adapted to the doubling of tempo by using the same number of pauses and halving their mean duration, the reading would fall on the sole-contribution point (1.0,0.5). Conversely, if a participant adapted by halving the number of pauses and maintaining their mean duration, the reading would fall on the other sole-contribution point (0.5,1.0). These two points represent completely one-sided behaviour. On the other hand, if a reader’s adaptation draws on both variables equally, so that n = d, and their product is 1⁄t, they must both equal the square root of 1⁄t (since t = 2 in this case, the square root of ½, or 1/√2). The adaptation then falls on the equal-contribution point:

(1/√2, 1/√2), equivalent to (0.707,0.707).
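The three points of interest follow directly from nd = 1⁄t, and can be computed for any tempo change with a short Python sketch. The function and dictionary key names are hypothetical labels chosen for this example.

```python
import math


def points_of_interest(tempo_ratio: float) -> dict:
    """Return the three (n, d) points on the curve n * d = 1 / t."""
    product = 1.0 / tempo_ratio
    equal = math.sqrt(product)  # n = d, so each equals sqrt(1 / t)
    return {
        "duration_only": (1.0, product),  # same number of pauses, shorter pauses
        "number_only": (product, 1.0),    # fewer pauses, same mean duration
        "equal_contribution": (equal, equal),
    }


points = points_of_interest(2.0)
for name, (n, d) in points.items():
    print(f"{name}: ({n:.3f}, {d:.3f})")
```

For t = 2 this gives the two sole-contribution points (1.0, 0.5) and (0.5, 1.0), and an equal-contribution point of approximately (0.707, 0.707).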

Predicting where performers would fall on the curve involved considering the possible advantages and disadvantages of using these two adaptive resources. A strategy of relying entirely on altering pause duration to adapt to a new tempo—falling on (1.0,0.5)—would permit the same number of pauses to be used irrespective of tempo. Theoretically, this would enable readers to use a standardised scanpath across a score, whereas if they changed the number of their pauses to adapt to a new tempo, their scanpath would need to be redesigned, sacrificing the benefits of a standardised approach. There is no doubt that readers are able to change their pause duration and number both from moment to moment and averaged over longer stretches of reading. Musicians typically use a large range of fixation durations within a single reading, even at a stable tempo.[27] Indeed, successive fixation durations appear to vary considerably, and seemingly at random; one fixation might be 200 ms, the next 370 ms, and the next 240 ms. (There are no data on successive pause durations in the literature, so mean fixation duration is cited here as a near-equivalent.)

In the light of this flexibility in varying fixation duration, and since the process of picking up, processing and performing the information on the page is elaborate, it might be imagined that readers prefer to use a standardised scanpath. For example, in four-part, hymn-style textures for keyboard, such as were used in Souter (2001), the information on the score is presented as a series of two-note, optically separated units: two notes allocated to an upper stave and two to a lower stave for each chord. A standardised scanpath might consist of a sequence of ‘saw-tooth’ movements from the upper stave to the lower stave for a chord, then diagonally across to the upper stave and down to the lower stave of the next chord, and so on. However, numerous studies[28] have shown that scanpaths in the reading of a number of musical textures (including melody, four-part hymns, and counterpoint) are not predictable and orderly, but are inherently changeable, with a certain ragged, ad hoc quality. Music readers appear to turn their backs on the theoretical advantage of a standardised scanpath: they are flexible, or ad hoc, in the number of pauses they use, just as they are with respect to their pause durations, and do not scan a score in a strict, predetermined manner.

Souter hypothesised that the most likely scenario is that both pause duration and number are used to adapt to tempo, and that a number–duration relationship lying close to the equal-contribution point allows the apparatus the greatest flexibility to adapt to further changes in reading conditions. He reasoned that it may be dysfunctional to use only one of two available adaptive resources, since relying on one resource alone would make it more difficult to draw further on that resource for subsequent adaptation. This hypothesis (that when tempo is increased, the mean number–duration relationship will be in the vicinity of the equal-contribution point) was confirmed by the data in terms of the mean result: when tempo doubled, both the mean number of pauses per chord and the mean pause duration fell such that the mean number–duration relationship was (0.705,0.709), close to the equal-contribution point of (0.707,0.707), with standard deviations of (0.138,0.118). Thus, the stability of scanpath, tenable only when the relationship is (1.0,0.5), was sacrificed to maintain a relatively stable mean pause duration.[29]
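The reported mean result can be checked against the curve with a small Python sketch. Only the pair (0.705, 0.709) and the tempo ratio of 2 come from the text above; the variable names and the use of Euclidean distance as a measure of closeness are assumptions made for illustration, not part of Souter's analysis.

```python
import math

# Mean (number ratio, duration ratio) reported in the text for a doubling of tempo.
observed = (0.705, 0.709)
tempo_ratio = 2.0

# Equal-contribution coordinate: both ratios equal sqrt(1 / t).
target = 1.0 / math.sqrt(tempo_ratio)

# A point on the curve n * d = 1 / t should have a product near 0.5.
product = observed[0] * observed[1]

# Straight-line distance from the equal-contribution point (illustrative metric).
distance = math.hypot(observed[0] - target, observed[1] - target)

print(f"product = {product:.4f}, distance = {distance:.4f}")
```

The product of the two reported ratios is very close to 0.5, and the point lies within a few thousandths of the equal-contribution point, which is consistent with the description of the mean result.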

This challenged the notion that scanpath (largely or solely) reflects the horizontal or vertical emphasis of the musical texture, as proposed by Sloboda (1985) and Weaver (1943), since these dimensions depend significantly on tempo.

Conclusions

Both logical inference and evidence in the literature point to three oculomotor imperatives in the task of eye movement in music reading. The first imperative seems obvious: the eyes must maintain a pace across the page that is appropriate to the tempo of the music, and they do this by manipulating the number and durations of fixations, and thereby the scanpath across the score. The second imperative is to provide an appropriate rate of refreshment of the information being stored and processed in working memory by manipulating the number and duration of fixations. This workload appears to be related to tempo, stimulus complexity and stimulus familiarity, and there is strong evidence that the capacity for high workload in relation to these variables is also connected with the skill of the reader. The third imperative is to maintain a span size that is appropriate to the reading conditions. The span must not be so small that there is insufficient time to perceive visual input and process it into musculoskeletal commands; it must not be so large that the capacity of the memory system to store and process information is exceeded. Musicians appear to use oculomotor commands to address all three imperatives simultaneously, which are in effect mapped onto each other in the reading process. Eye movement thus embodies a fluid set of characteristics that are intimately engaged not only in engineering the optimal visual input to the apparatus, but also in servicing the processing of that information in the memory system.[30]

Notes

- ↑ e.g., Matin (1974)

- ↑ e.g., Goolsby, 1987; Smith, 1988

- ↑ Sloboda (1985)

- ↑ Rayner et al. (1990)

- ↑ Jacobsen 1941; Weaver 1943; Weaver & Nuys 1943; York 1951; and Lang 1961

- ↑ Jacobsen 1941; York 1951; and Lang 1961

- ↑ Young 1971

- ↑ Weaver (1943), York (1951) and Young (1971)

- ↑ e.g., Weaver (1943), Young (1971), Goolsby (1987)

- ↑ Souter (2001:80–81)

- ↑ Souter (2001:80–85)

- ↑ Souter (2001:90)

- ↑ Goolsby 1987:107

- ↑ Souter (2001:89–90)

- ↑ Goolsby (1987:88), assuming there was no extraneous methodological factor, such as failing to exclude from total durations the 'return-sweep' saccades and non-reading time at the start and end of readings.

- ↑ Souter (2001:91–92)

- ↑ Souter (2001:93)

- ↑ Sloboda (1985:70)

- ↑ Souter (2001:97)

- ↑ Pollatsek & Rayner (1990:153)

- ↑ Goolsby (1987), Smith (1988)

- ↑ Souter (2001:138,140)

- ↑ e.g., Buswell (1920), Judd and Buswell (1922), Tinker (1958), Morton (1964)

- ↑ Buswell (1920), Lawson (1961), Morton (1964)

- ↑ Levin and Kaplin (1970), Levin and Addis (1980)

- ↑ Furneaux S & Land MF (1999) The effects of skill on the eye-hand span during musical sight-reading, Proceedings of the Royal Society of London, Series B: Biological Sciences, 266, 2435–40

- ↑ e.g., Smith (1988)

- ↑ e.g., Weaver (1943), Goolsby (1987)

- ↑ Souter (2001:137). Mean pause duration was 368 ms at slow tempo (SD = 44 ms) falling to 263 ms at fast tempo (SD = 42 ms), t(8) = 5.75, p < 0.001. Correspondingly, the mean number of pauses per chord fell from a mean of 2.75 (SD = 0.30) at slow tempo to 1.94 (SD = 0.28) at fast tempo, t(8) = 6.97, p < 0.001.

- ↑ Souter (2001:103)

References

- Drai-Zerbib V & Baccino T (2005) L'expertise dans la lecture musicale: intégration intermodale [Expertise in music reading: intermodal integration]. L'Année Psychologique, 105, 387–422

- Furneaux S & Land MF (1999) The effects of skill on the eye-hand span during musical sight-reading, Proceedings of the Royal Society of London, Series B: Biological Sciences, 266, 2435–40

- Goolsby TW (1987) The parameters of eye movement in vocal music reading. Doctoral dissertation, University of Illinois at Urbana-Champaign, AAC8721641

- Goolsby TW (1994a) Eye movement in music reading: effects of reading ability, notational complexity, and encounters. Music Perception, 12(1), 77–96

- Goolsby TW (1994b) Profiles of processing: eye movements during sightreading. Music Perception, 12(1), 97–123

- Kinsler V & Carpenter RHS (1995) Saccadic eye movements while reading music. Vision Research, 35, 1447–58

- Lang MM (1961) An investigation of eye-movements involved in the reading of music. Transactions of the International Ophthalmic Optical Congress, London: Lockwood & Son, 329–54

- Matin E (1974) Saccadic suppression: a review and analysis. Psychological Bulletin, 81, 899–917

- McConkie GW & Rayner K (1975) The span of the effective stimulus during a fixation in reading. Perception and Psychophysics, 17, 578–86

- Pollatsek A & Rayner K (1990) Eye movements, the eye-hand span, and the perceptual span in sight-reading of music. Current Directions in Psychological Science, 149–153

- O'Regan KJ (1979) Moment to moment control of eye saccades as a function of textual parameters in reading. In PA Kolers, ME Wrolstad, H Bouma (eds), Processing of Visible Language, 1, New York: Plenum Press

- Reder SM (1973) On-line monitoring of eye position signals in contingent and noncontingent paradigms. Behaviour Research Methods and Instrumentation, 5, 218–28

- Servant I & Baccino T (1999) Lire Beethoven: une étude exploratoire des mouvements des yeux [Reading Beethoven: an exploratory study of eye movement]. Musicae Scientiae, 3(1), 67–94

- Sloboda JA (1974) The eye–hand span—an approach to the study of sight reading. Psychology of Music, 2(2), 4–10

- Sloboda JA (1985) The musical mind: the cognitive psychology of music. Oxford: Clarendon Press

- Smith DJ (1988) An investigation of the effects of varying temporal settings on eye movements while sight reading trumpet music and while reading language aloud. Doctoral dissertation, UMI 890066

- Souter T (2001) Eye movement and memory in the sight reading of keyboard music. Doctoral dissertation, University of Sydney

- Truitt FE, Clifton C, Pollatsek A, Rayner K (1997) The perceptual span and the eye–hand span in sight reading music. Visual Cognition, 4(2), 143–61

- Weaver HA (1943) A survey of visual processes in reading differently constructed musical selections. Psychological Monographs, 55(1), 1–30

- York R (1952) An experimental study of vocal music reading using eye movement photography and voice recording. Doctoral dissertation, Syracuse University

- Young LJ (1971) A study of the eye-movements and eye-hand temporal relationships of successful and unsuccessful piano sight-readers while piano sight-reading. Doctoral dissertation, Indiana University, RSD72-1341